middle out compression (3/3)

trends: wealth gap and consumption and AI/GLP-1

Wealth Gap and Consumption

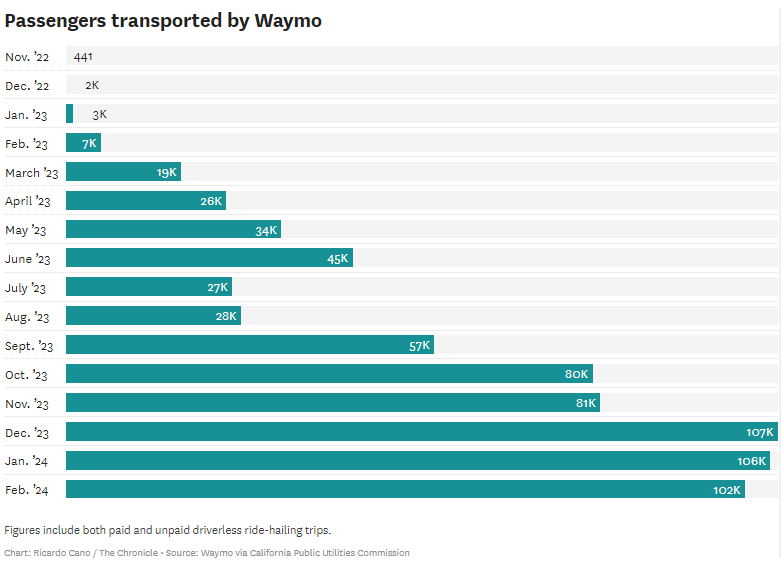

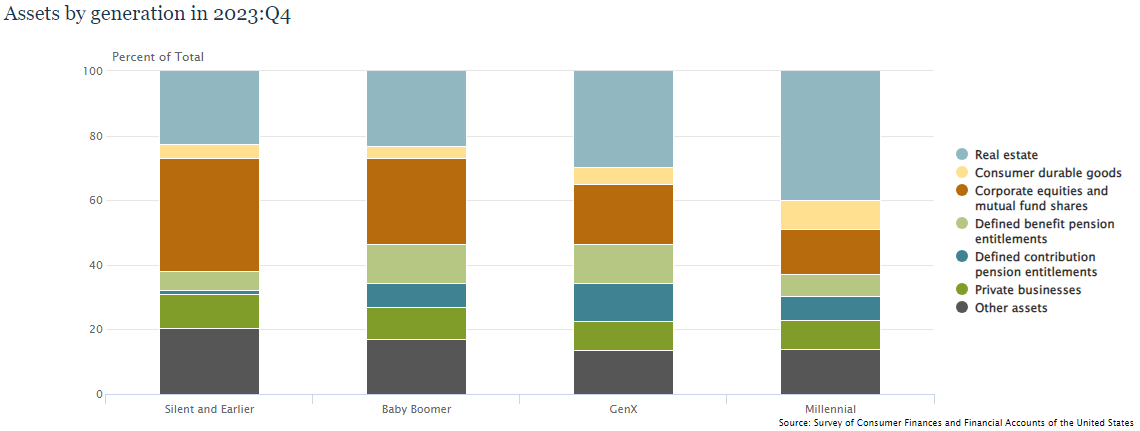

Here’s the wealth distribution:

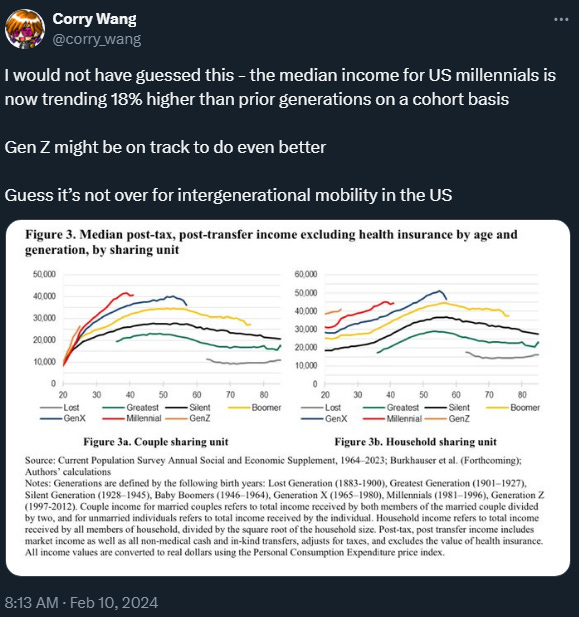

More and more people are disaggregating by generation and it has been nice to see, as demographics has needed more discourse. I believe it drives consumption patterns and explains many other dynamics that are happening, including labor redistribution and productivity growth. The wealth transfer from boomers is already happening, and I think it ends up causing a “shelter QT” dynamic which reduces consumption elsewhere.

I would like to emphasize “full employment” caused/is causing a redistribution of labor that is beneficial to the real economy, especially the lower income quartiles that experienced the most wage growth during the last few years. Even so, having assets vs not having assets continues to cause bad vibes, especially for real estate due to home affordability.

I am going to reiterate the July post:

Wealth being distributed so top heavy is not healthy. Nominal growth being sustained by the upper income quartiles is what gave rise to populism, emerging in the forms of Former President Donald Trump and Bernie Sanders. What has been increasingly apparent is that there exists a barrier to upward mobility and how to improve the quality of life for all. That barrier has taken the form of inflation.

It has been a surprise to see how little the K-shaped economy is discussed with respect to monetary policy (especially in the context of the wealth effect). There are some folks who seem certain that assets going up increases consumption and leads to reflation. I think it is going to confuse people when lower rates lead to lower inflation, especially as better affordability improves existing home sale transaction volume.

AI and GLP-1

If people took a step back, they might appreciate how “good” the economy is doing right now, especially with respect to housing, health, food and energy in aggregate. That does not mean we cannot improve on it even more. I believe we can.

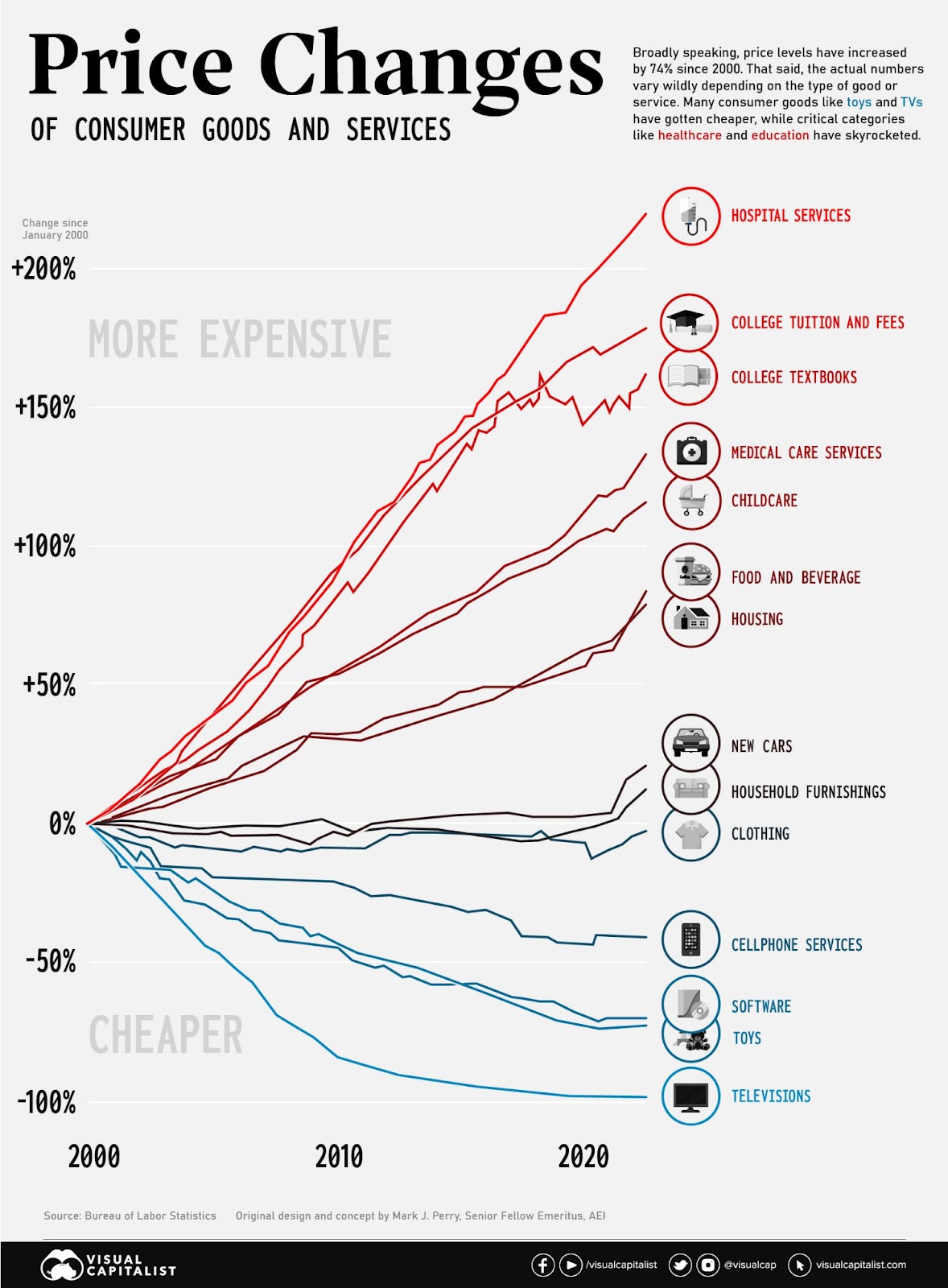

The top four items on the following price change chart will be addressed with AI (GPT+automation) and GLP-1. I had been leaving a little room for debate, but the evidence has become overwhelming. Being bearish on productivity growth in 2024 is like forecasting a recession in 2023, and Robert Gordon will be disappointed by the next decade.

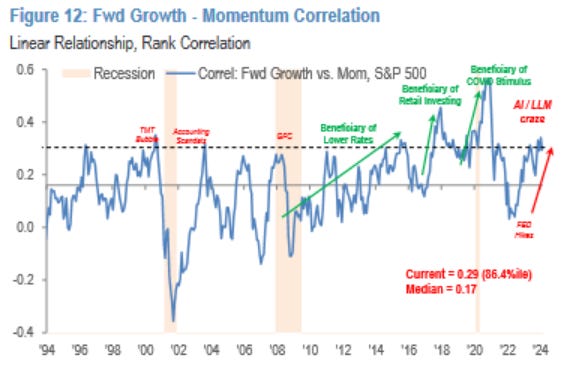

Let’s get this out of the way. Nvidia is probably going to do the chop/dip-and-get-bought-back thing for the next few quarters, as it digests its recent price appreciation. This is not meant to be a long NVDA write-up. I am just updating my priors that the adoption rate of LLMs (Large Language Models) is well above expectations and providing the evidence that I believe supports that claim. Before that, here are some charts to scare you away from going long.

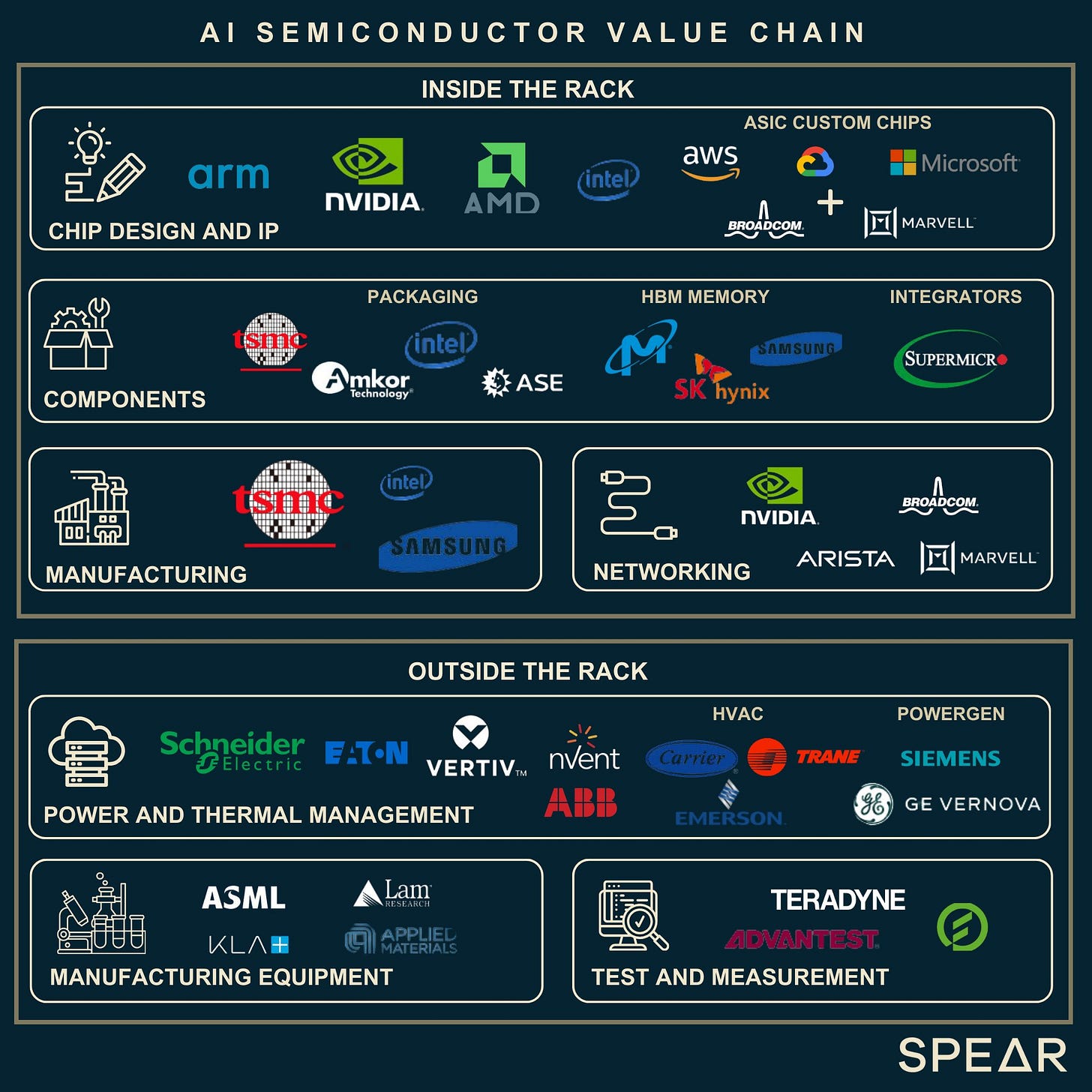

Everyone knows the “I” part of the LLM ROI thesis at this point, but these are still good charts breaking down the semiconductor value chain.

The semiconductor equipment billings chart is not what “late” cycle looks like, much to the dismay of many. Microsoft and OpenAI have dominated the cloud while Nvidia dominated the data center side of the AI landscape.

It’s not 1999, but it’s also not 1995 either. The only 1995 part is that Chair Powell is doing his Greenspan impression. In the meantime, revenue per employee continues to go up and IPOs are starting.

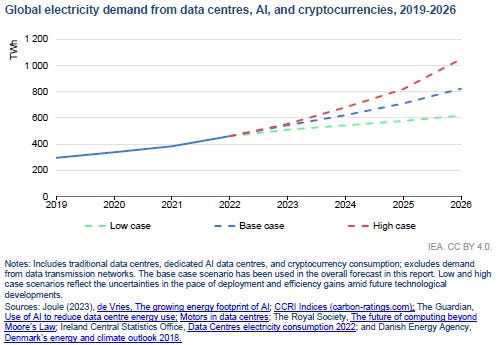

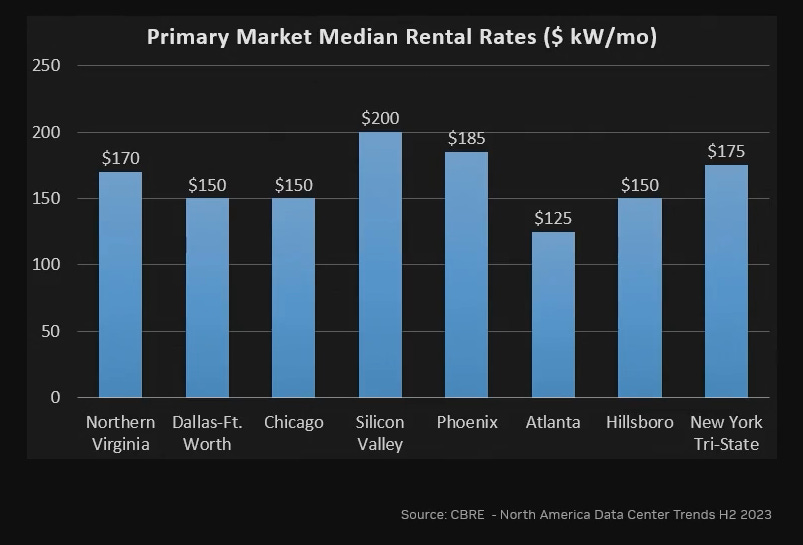

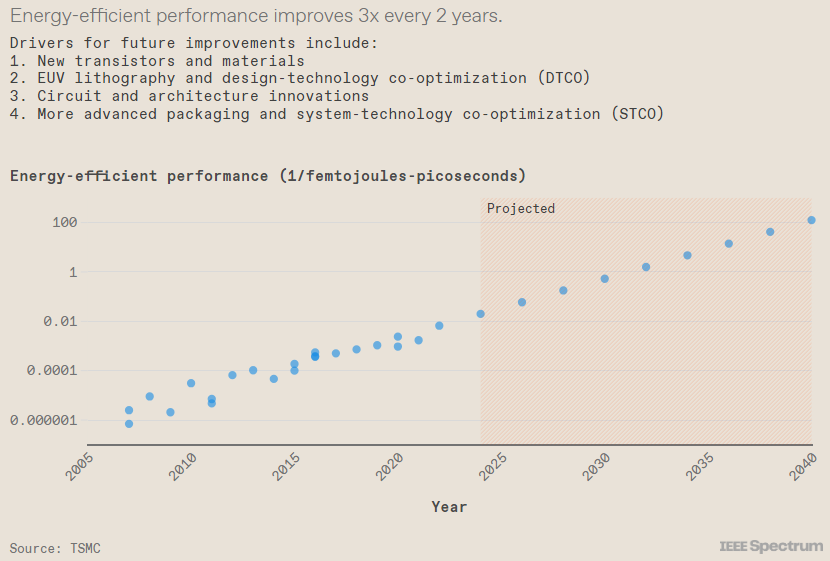

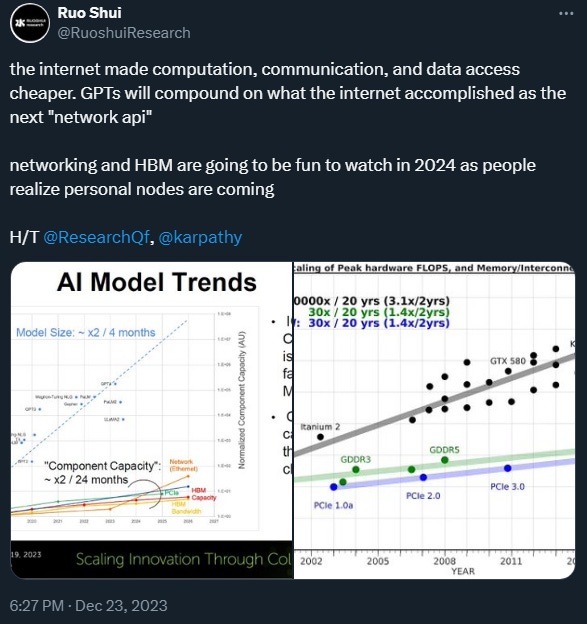

The amount of capex happening is likely to keep going until the typical diminishing returns appear, but that’s really hard to predict as compute is scarce and limited by energy. The bullwhip will happen but it is not in 2024. The AI labs capable of training foundational models are still in an arms race after witnessing Scaling Laws through Sora.

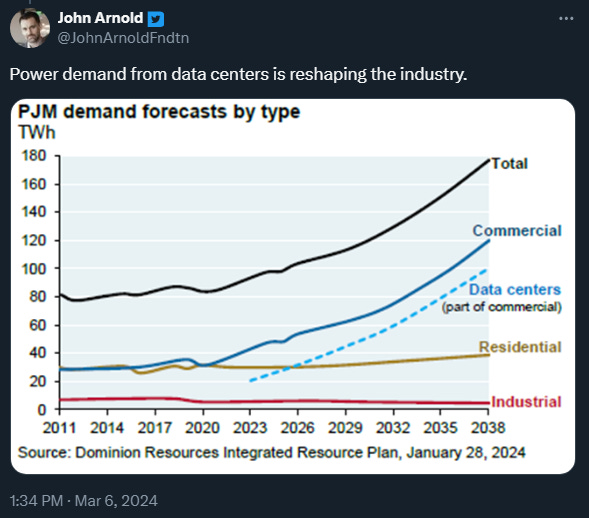

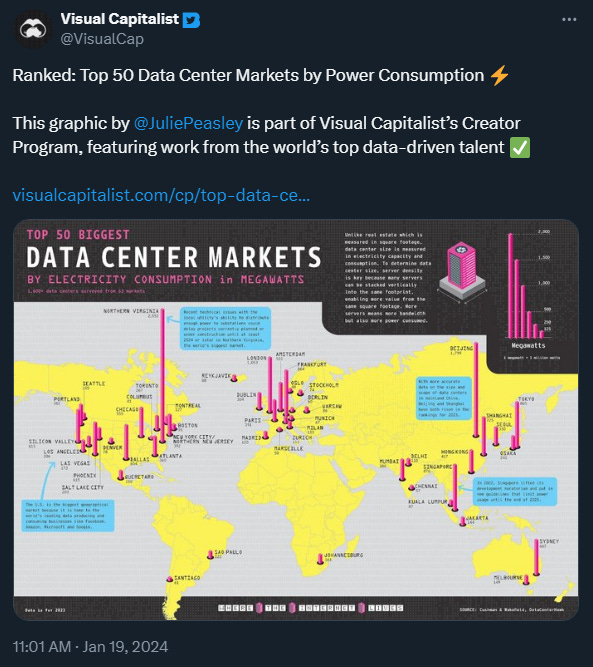

With data center growth, power demand is climbing as well. I am sure there are people trying to estimate the projected power consumption, but I think it is hard to estimate algorithm energy efficiency improvements, such as the recent Google Mixture-of-Depths transformer.

Make no mistake, this infrastructure has the full support of Uncle Sam and AI is the industrial policy. It is just a matter of how well future industrial policy distributes the growth that comes from this revolution. I have zero faith that Betsy DeVos’s successor will take the next step the education system needs in order to adapt and train the future workforce.

Measurement units for compute are becoming entire racks as cluster density goes up. Moore's Law is not dead; it just took another form, namely energy and thermal efficiency at the level of a system of chips.

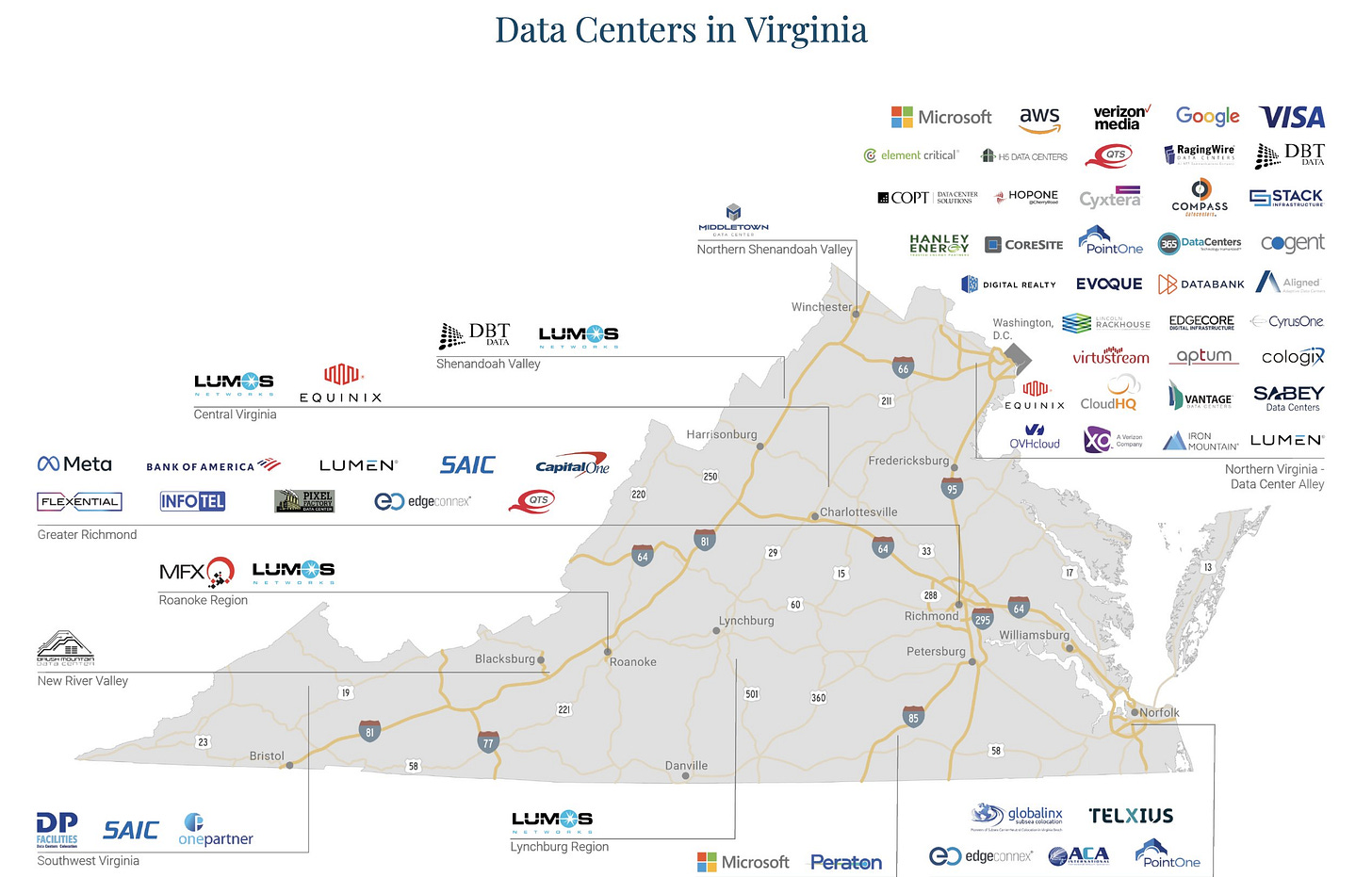

The data center growth/grid revamp opportunity and the tax code (TCJA) are likely to be the two fiscal policies addressed by the next administration. There is an opportunity here to build new cities and alleviate the housing shortage. The issue with trying to build in high density metros is a combination of zoning, regulation and NIMBYism. The government is probably the only actor that is capable of building out new infrastructure with a sustainable job market. Being walkable would just be a bonus, but remote work has already helped with that.

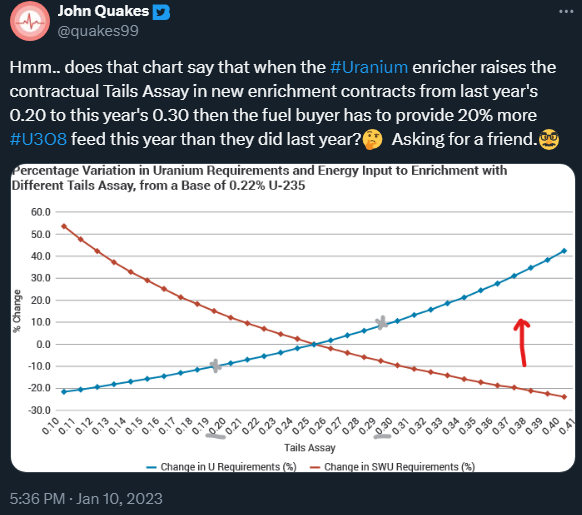

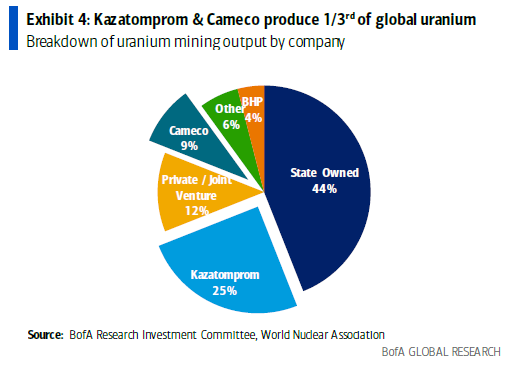

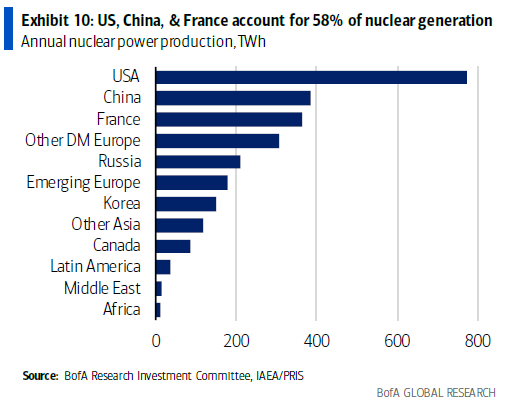

I have been holding off on writing about uranium, but finally caved. So similar to Nvidia, I want to say this is not a long uranium post. Bank of America provided a good collection of charts describing the tight supply driving price dynamics. Uranium provides the most stable and energy efficient solution. The world has noticed Japan pivoting its nuclear policy. The only arguments against nuclear are sentiment and safety/waste. Sentiment is shifting and safety/waste are being addressed.

A recent development in renewables has been geothermal enthusiasm.

I believe technology is deflationary and this time will not be any different. The ability to leverage compression has led to the achievements of steam, internal combustion, turbofan/turbojet, and rocket engines (in increasing compression ratio). What happens when knowledge is compressed?

I find it interesting that some folks think productivity is not “real,” yet think monetary policy is not restrictive. The entire neutral rate discourse should be largely ignored with respect to Fed reaction function, and a higher neutral rate is a good thing.

I have been arguing for a shift from labor to efficiency capex for quite some time and it has become evident. Here are a couple of examples of restaurants that have figured out ways to improve efficiency without job loss.

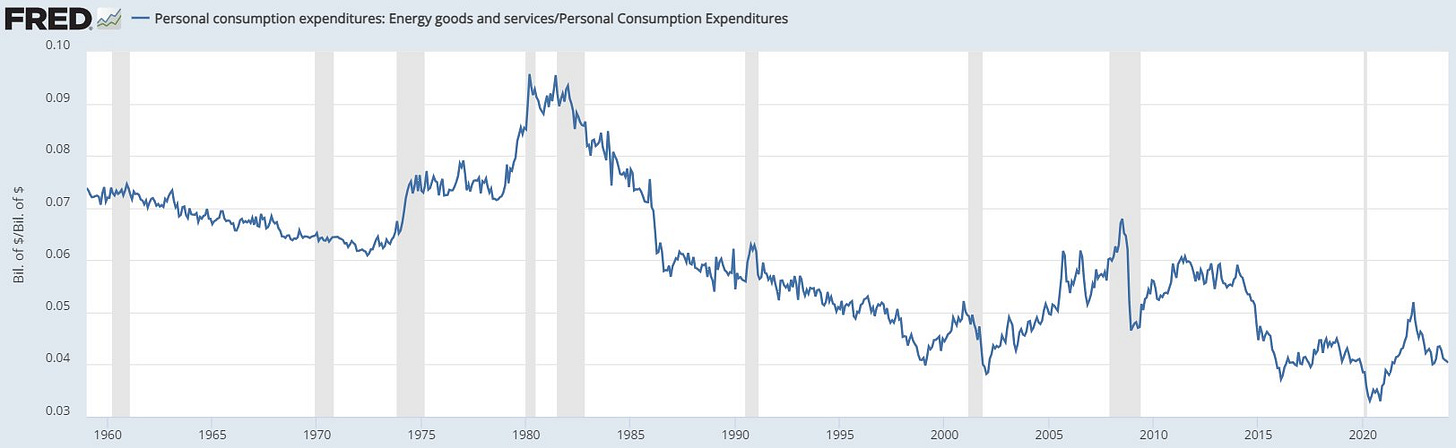

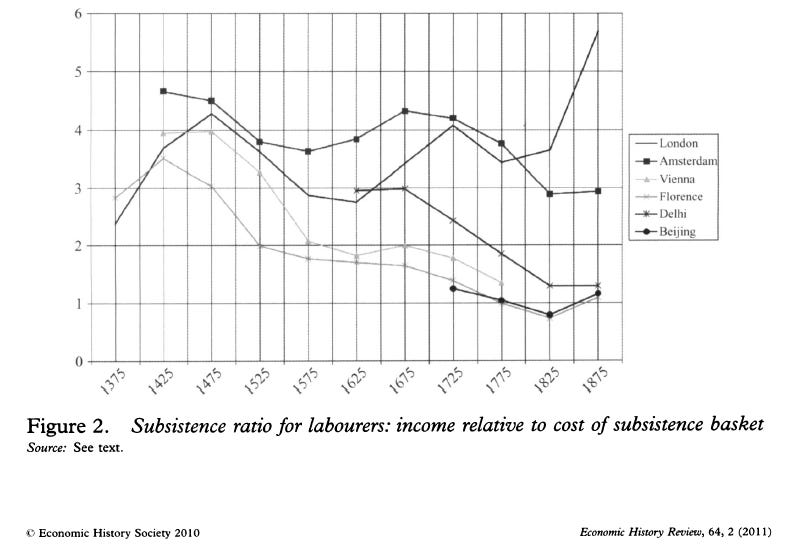

Let’s talk about the British since it provides further confirmation bias for my view. R.C. Allen argued that labor productivity growth (e.g. the spinning jenny and steam engine) arose from a high cost of labor relative to capital and energy. Does that sound familiar? It sounds like 2024 America. Yes, I would argue energy is cheap on the aggregate level based on percentage of overall consumption, especially given that the US is now a net exporter of energy (frankly energy dominant).

The British Industrial Revolution offers an echo of current labor market and innovation dynamics. If this sounds familiar, it is because this is the Cobb-Douglas production function.
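To make the Cobb-Douglas link concrete, here is a minimal sketch of how expensive labor pushes firms toward capital and raises output per worker. It uses the two-factor form Y = A * K^alpha * L^(1 - alpha) and leaves energy out for simplicity. The capital share `alpha = 0.3`, the factor prices and the function names are my own illustrative assumptions, not numbers from Allen or from this post.

```python
def capital_labor_ratio(wage, rental_rate, alpha):
    """Cost-minimizing K/L for Y = A * K**alpha * L**(1 - alpha).

    Setting the ratio of marginal products equal to the factor price ratio
    gives K/L = (alpha / (1 - alpha)) * (wage / rental_rate).
    """
    return (alpha / (1.0 - alpha)) * (wage / rental_rate)


def output_per_worker(tfp, k_per_worker, alpha):
    """Labor productivity Y/L = A * (K/L)**alpha."""
    return tfp * k_per_worker ** alpha


if __name__ == "__main__":
    ALPHA = 0.3  # assumed capital share, for illustration only
    TFP = 1.0    # total factor productivity held fixed

    # Hypothetical economies: labor is twice as expensive relative to capital in the second.
    cheap_labor = capital_labor_ratio(wage=1.0, rental_rate=1.0, alpha=ALPHA)
    costly_labor = capital_labor_ratio(wage=2.0, rental_rate=1.0, alpha=ALPHA)

    print("K/L with cheap labor: ", round(cheap_labor, 3))
    print("K/L with costly labor:", round(costly_labor, 3))
    print("Productivity gain:    ",
          round(output_per_worker(TFP, costly_labor, ALPHA)
                / output_per_worker(TFP, cheap_labor, ALPHA), 3))
```

Under these assumptions, doubling the wage relative to the cost of capital doubles the capital chosen per worker, and output per worker rises by a factor of 2^alpha even with no change in technology. That is the substitution channel; Allen's point is that the same price signal also makes labor-saving invention worth the effort.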

The kicker is there are two sources of productivity growth: GLP-1 and GPT-4. Even after a parabolic 2023, the obesity market is still just getting started. Novo Nordisk’s oral amycretin results were presented on 3/7/2024, and Wegovy was also approved in the US for cardiovascular risk reduction in people with overweight or obesity and established cardiovascular disease. A study was released recently for Parkinson's disease and there is a new study into its effects in pregnancy. Meanwhile, programs run by Elevance, Kaiser Permanente and CVS Health have decided to extend coverage of the medicine for people with heart-related conditions under Medicare. Apparel is being compressed, while people are becoming slimmer and healthier. Incorporating “lifestyle changes” into employment-based coverage is how obesity drugs achieve even higher market penetration.

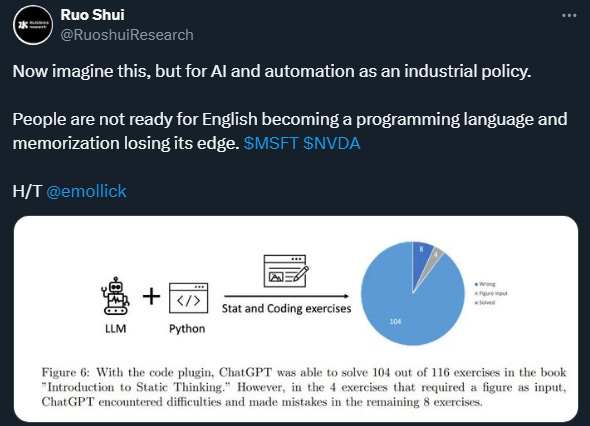

GPT-4 is already helping solve the skilled labor shortage. It still surprises me that some people do not know there is a built-in Python IDE within GPT-4 and that Bing offers its finetuned version for free. Microsoft has since added a “Notebook” feature that provides an increased limit of 18K characters. It is time to bring balance sheets and compounding assets/debts to high school. Hopefully, IRS Direct File makes the tax part easier. Data analysis is the low hanging fruit. That will only become even more obvious as Copilot in Excel improves with its Python/VBA calls and catches up to GPT-4 capabilities.

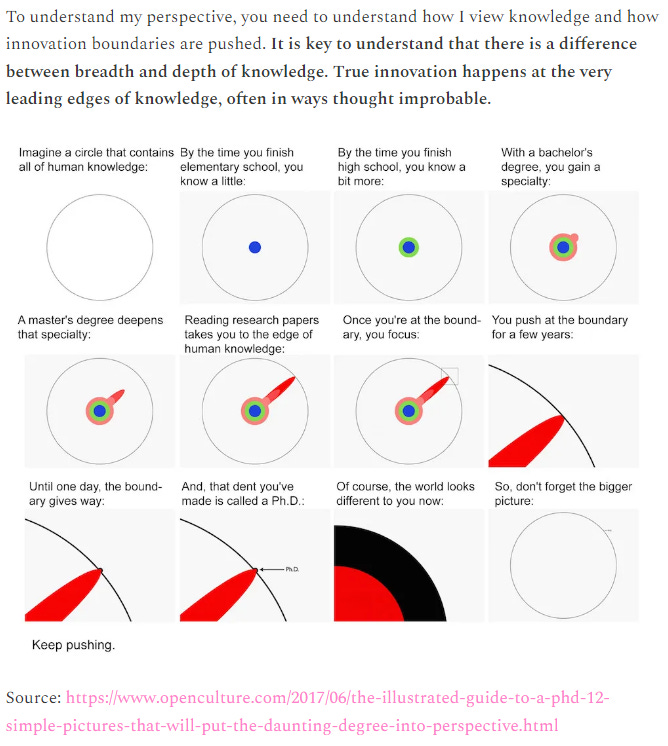

I am glad to see more people sharing the view that AI is actually beneficial for the wealth gap. I think it helps the higher skilled less and this is a good thing. Even acting purely as a documentation tool, LLMs are worth billions for knowledge transfer alone.

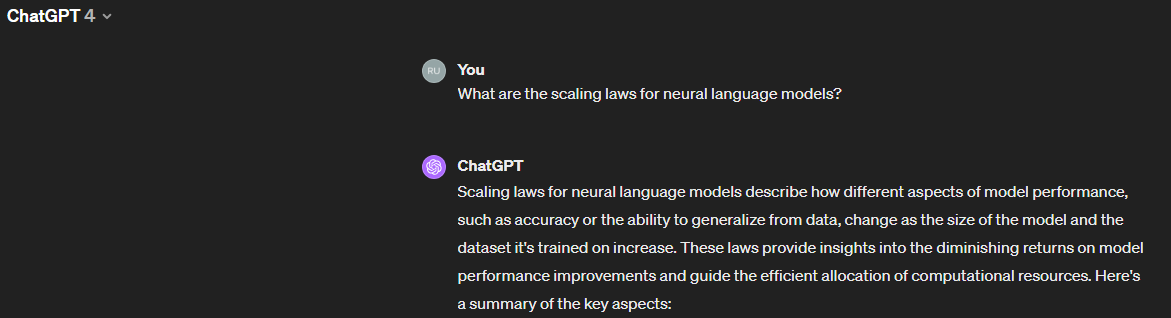

Before I go on, I want to define “AI.” Notice I have rarely mentioned “AGI” (Artificial General Intelligence). Good. That is the only time you will see that acronym. For me, “AI” is the introduction of LLMs as the next operating system and how people interface with information and data. I would rather talk about Scaling Law, Sora, and networks. Scaling Law is what caused the hyperscaler arms race.

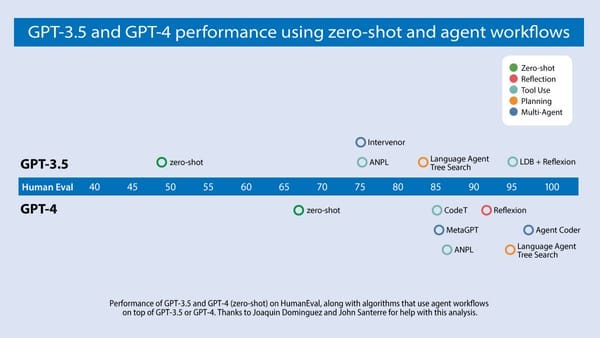

To prove a point, I asked GPT-4 (note I said GPT-4). GPT-4 went on a rant longer than that, but I really just wanted to see if it can put together a chart. It did. The OpenAI paper figure is included for comparison.
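For readers who want to reproduce that kind of chart themselves, here is a minimal sketch, assuming the figure in question is the familiar log-log plot in which loss falls as a power law in compute. The data below is synthetic and generated from made-up constants (`a = 10.0`, `b = 0.05`); it is not taken from the OpenAI paper or from the GPT-4 output, and the function names are my own.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute**(-b), done in log-log space.

    A power law is a straight line after taking logs:
    log(loss) = log(a) - b * log(compute).
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)


def synthetic_losses(compute, a=10.0, b=0.05):
    """Illustrative data only: losses generated from an assumed power law."""
    return [a * c ** (-b) for c in compute]


if __name__ == "__main__":
    compute = [10.0 ** k for k in range(1, 9)]  # arbitrary compute units
    a, b = fit_power_law(compute, synthetic_losses(compute))
    print("fitted a:", round(a, 4), "fitted b:", round(b, 4))
```

The takeaway is the shape rather than the constants: if loss is a straight line on log-log axes, every additional order of magnitude of compute buys a predictable improvement, which is the logic behind the arms race.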

The most common misconception comes from people focusing on the “language” part of LLM. MLP (Multilayer Perceptron) and neural nets are not new concepts. If you really wanted to, you could oversimplify “AI” to just regression analysis, with layers of weights that form a probability distribution. At a certain point, the algorithm just becomes an association and classification problem. However, that’s the part where Scaling Laws come in. It is the massive dataset and compute used to produce associations with predictive accuracy. How much longer compute can keep scaling without diminishing returns is a topic for another time. From a high-performance computing perspective, GPT-4 was a breakthrough. Whether it translates to physics-based simulations is a different story, as transport equations have required sequential computing to accurately resolve high gradient regions.
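To show how small the core idea is, here is a toy sketch of "layers of weights that form a probability distribution": a two-layer perceptron whose output is passed through a softmax. The weights are arbitrary numbers I picked for illustration, nothing is trained, and a real LLM differs in scale and architecture, so treat this as a picture of the oversimplification rather than of GPT-4.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """One layer of weights: each row of `weights` produces one output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Nonlinearity applied between layers."""
    return [max(0.0, v) for v in values]


def mlp_forward(inputs, layers):
    """Run inputs through (weights, biases) layers; softmax on the last one."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return softmax(activations)


# Hypothetical, hand-picked weights: 2 inputs -> 2 hidden units -> 3 classes.
TOY_LAYERS = [
    ([[0.5, -0.25], [0.1, 0.3]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.0, 1.0], [0.5, 0.5]], [0.0, 0.0, 0.0]),
]

if __name__ == "__main__":
    probabilities = mlp_forward([1.0, 2.0], TOY_LAYERS)
    print([round(p, 3) for p in probabilities])
```

Training is just the regression step: adjust the weights so the probability assigned to the observed outcome goes up. Scaling Laws describe what happens when the same recipe is given far more data and compute.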

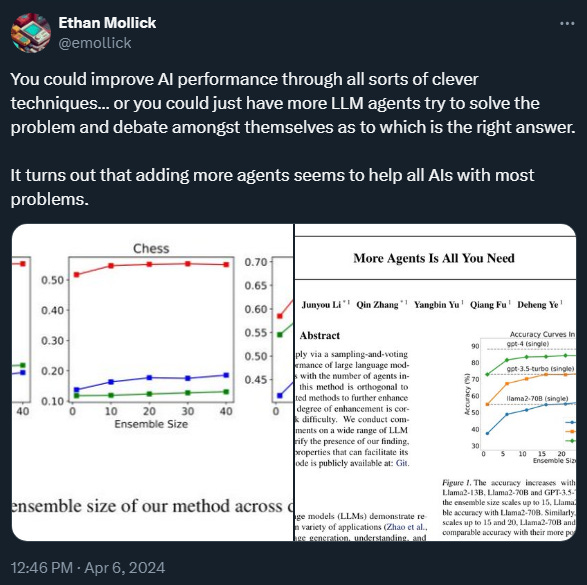

I recommend the recent Yann LeCun podcast, especially because he used the “knowledge circle”. I disagree with his conclusion because he is focusing on a “singular” world model. I believe it will be a network of models that forms the world model, much like how individual websites created the internet. However, I agree with him that LLMs cannot “reason,” in the sense that interpretability has not been achieved. Without interpretability, causal relationships can be missed and the algorithm cannot be said to be deterministic. Memory is being addressed with longer context lengths and planning is a breakdown of complex tasks into subtasks. Any task can be broken down. That is how tasks are executed, step by step.

GPT-4 is sufficient for massive adoption. At least that is what Microsoft’s Satya Nadella thinks, as there was a possibility OpenAI was about to splinter on 11/17/2023 with eager sharks circling.

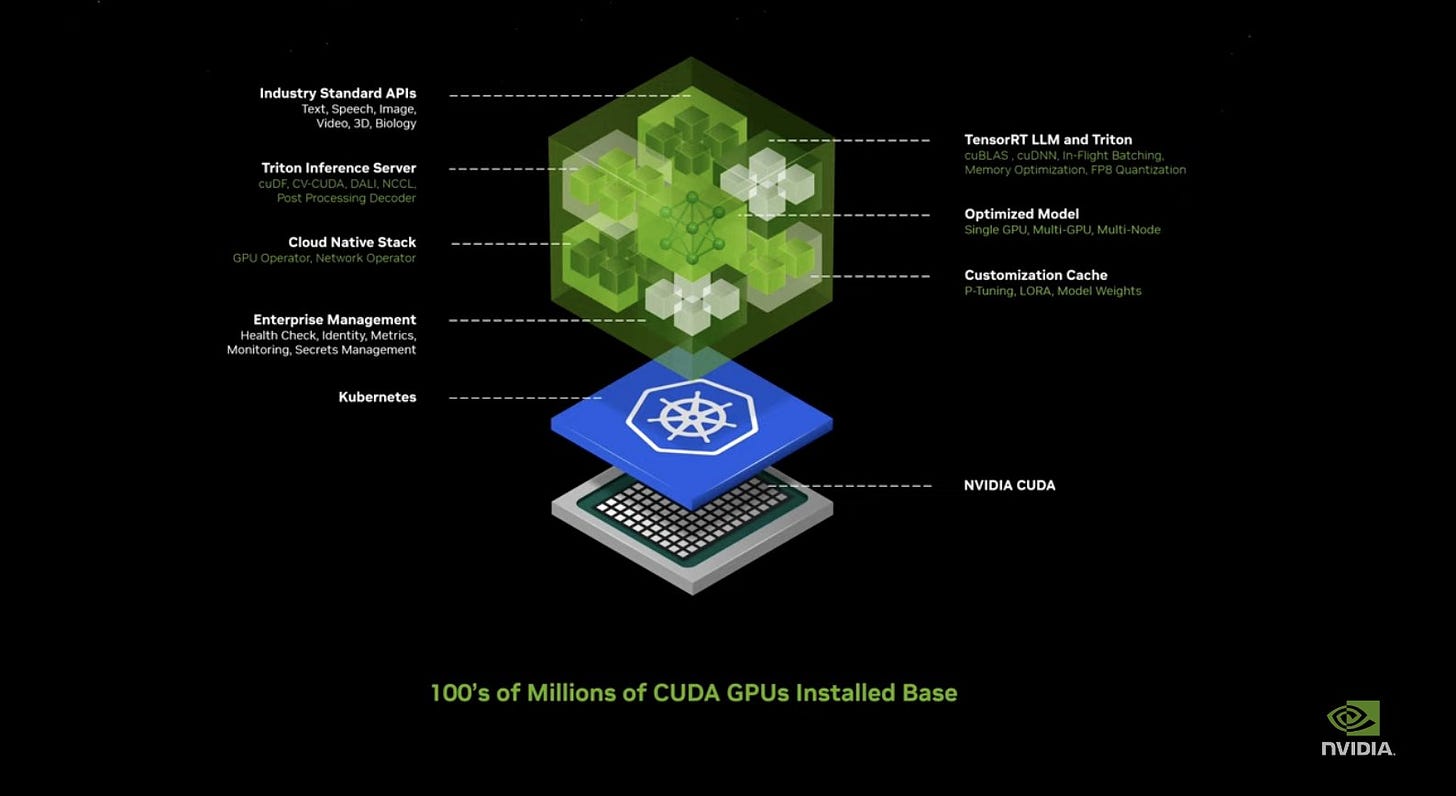

Now, I can talk about Nvidia GTC. The following picture is the essence of “knowledge compression.” If you do not like LLMs, Automation and the Metaverse, this is probably a good place to stop reading.

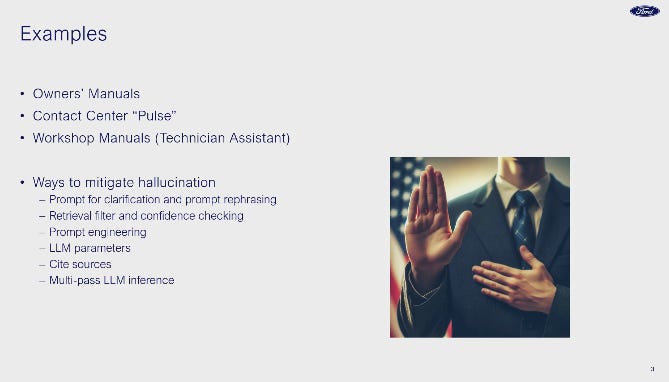

Every company in every sector is going to utilize some form of the NeMo framework and develop their own sector-specific customized LLMs and agents.

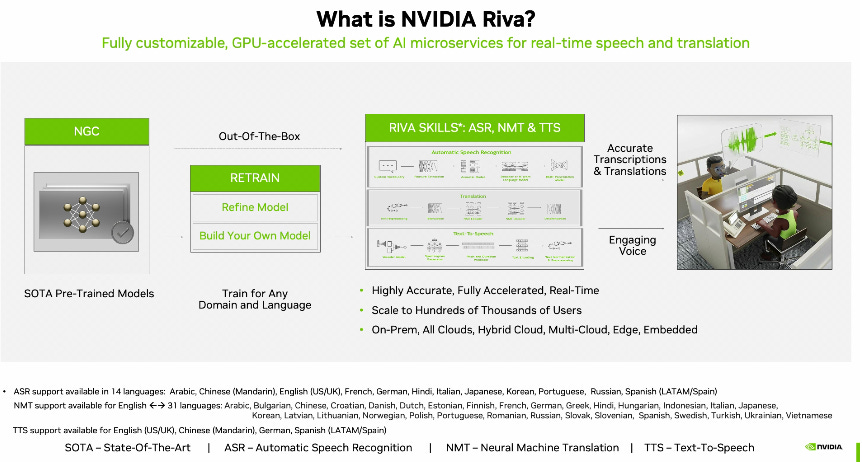

The term “agents” is going to be common soon. The future is multi-modal and autonomously augmented. It’s also multi-lingual.

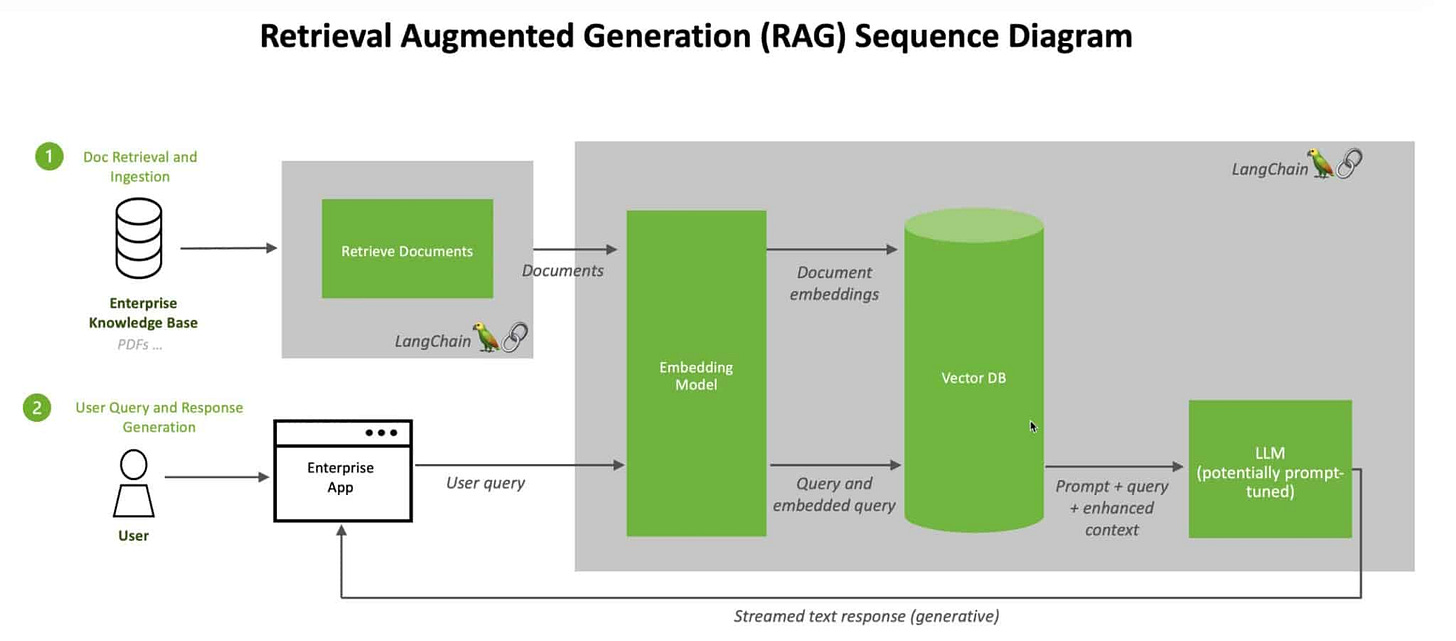

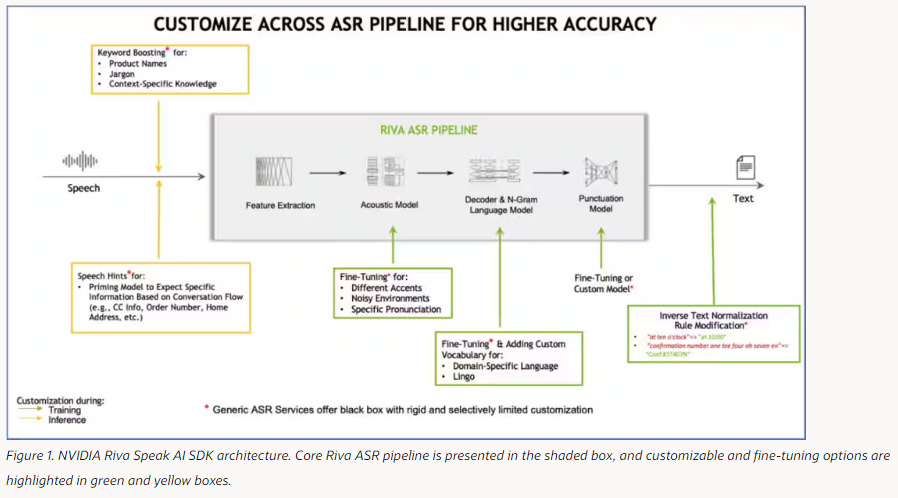

Most AI workflows revolve around figuring out the balance between using retrieval-augmented generation (RAG) and finetuning the model into an agent. Call centers have already fully embraced this framework as speech-to-text has been achieved with relatively high accuracy and call transcripts have become structured data within data lakes.
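For anyone unfamiliar with RAG, here is a minimal sketch of the retrieval half, assuming call transcripts are already stored as text. It scores transcripts against a question with bag-of-words cosine similarity and pastes the best match into a prompt. Production systems generally use learned embeddings and a vector store instead; the transcripts, function names and prompt layout below are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical transcripts standing in for records in a data lake.
TRANSCRIPTS = [
    "Customer called about a late delivery and asked for a refund.",
    "Customer asked how to reset the account password.",
    "Customer wanted to upgrade the subscription plan.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return up to top_k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=1):
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How do I reset my password?", TRANSCRIPTS))
```

The design trade-off is that retrieval keeps the model fixed and swaps the context, so new transcripts are usable as soon as they are indexed, while finetuning bakes the behavior into the weights and needs retraining when the data changes.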

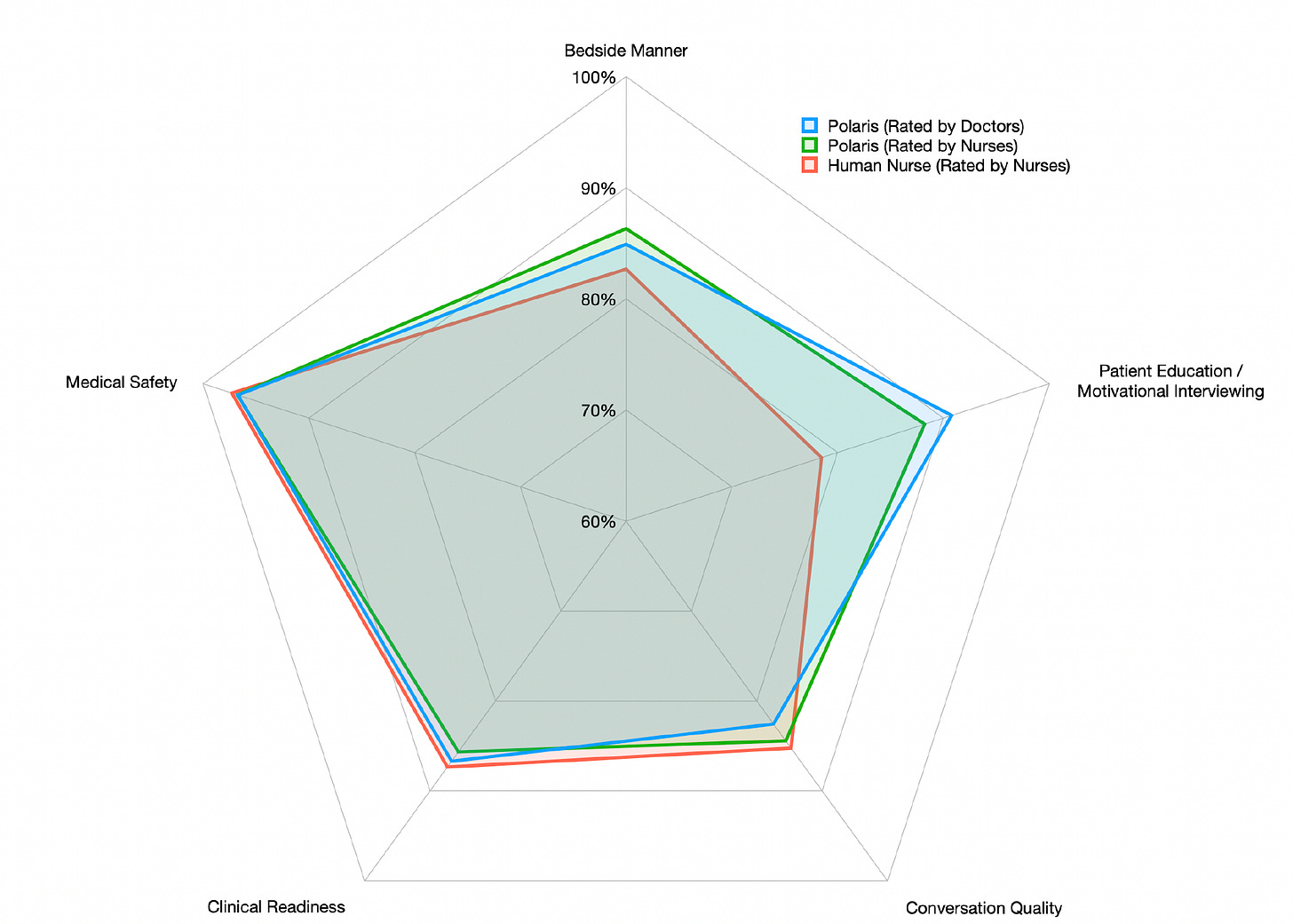

I believe the healthcare industry is undergoing transformation, led by Google, Oracle (Cerner) and Epic electronic health record (EHR) services. Hippocratic AI presented Polaris, “the first safety-focused large language model constellation for real-time patient-AI healthcare conversations.” Hippocratic AI recruited 1,100 nurses and 130 physicians to engage their >1 trillion parameter LLM in simulated patient actor conversations, often exceeding 20 minutes. Polaris performance, rated by nurses, was as good or better for each of the 5 parameters assessed.

The architecture of Polaris consists of multiple domain-specific agents to support the primary agent that is trained for nurse-like conversations, with natural language processing patient speech recognition and a digital human avatar face communicating to the patient. The support agents, with a range from 50 to 100 billion parameters, provide a knowledge resource for labs, medications, nutrition, electronic health records, checklist, privacy and compliance, hospital and payor policy, and need for bringing in a human-in-the-loop. This network of healthcare agents is just another demonstration of “network API”.
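The primary-agent-plus-support-agents pattern can be sketched in a few lines. This is not Hippocratic AI's implementation: per the description above each Polaris agent is an LLM, whereas here every agent is a stub function and routing is plain keyword matching. The class names, keywords and canned replies are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SupportAgent:
    """A domain specialist; `respond` stands in for a call to a smaller model."""
    name: str
    keywords: List[str]
    respond: Callable[[str], str]


class PrimaryAgent:
    """Holds the conversation and consults support agents when relevant."""

    def __init__(self, support_agents, escalation_keywords):
        self.support_agents = support_agents
        self.escalation_keywords = escalation_keywords

    def handle(self, utterance):
        text = utterance.lower()
        # Safety first: hand off to a person before consulting any specialist.
        if any(keyword in text for keyword in self.escalation_keywords):
            return ["human-in-the-loop: escalate to a nurse"]
        notes = [
            f"{agent.name}: {agent.respond(utterance)}"
            for agent in self.support_agents
            if any(keyword in text for keyword in agent.keywords)
        ]
        return notes or ["primary: continue the conversation"]


def build_demo_agent():
    """Wire up a toy constellation with canned, illustrative replies."""
    specialists = [
        SupportAgent("medications", ["medication", "dose"],
                     lambda _: "check the prescription record"),
        SupportAgent("nutrition", ["food", "diet"],
                     lambda _: "check dietary guidance"),
        SupportAgent("labs", ["lab", "test result"],
                     lambda _: "look up recent lab values"),
    ]
    return PrimaryAgent(specialists, escalation_keywords=["chest pain"])


if __name__ == "__main__":
    agent = build_demo_agent()
    for line in agent.handle("Can I take my medication with food?"):
        print(line)
```

The point of the structure is that each specialist can be improved, audited or replaced on its own, and the escalation check sits outside all of them.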

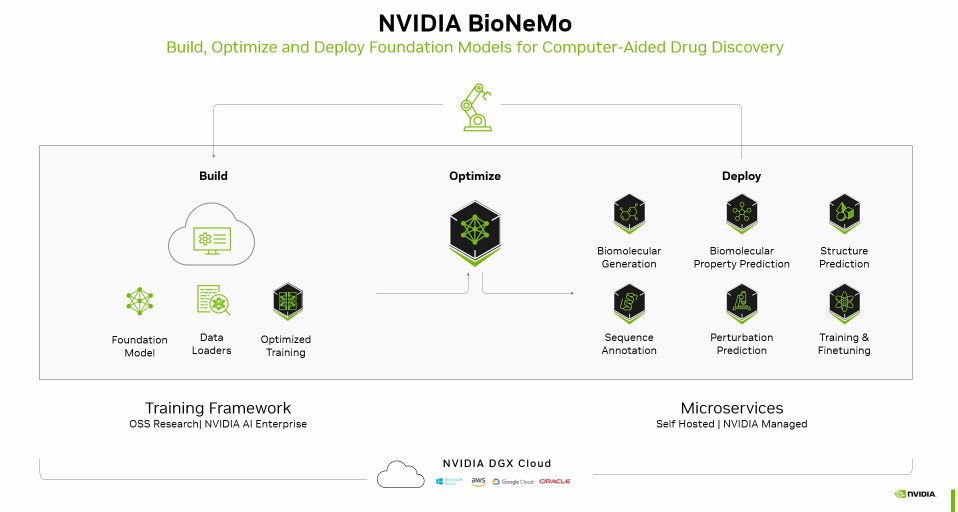

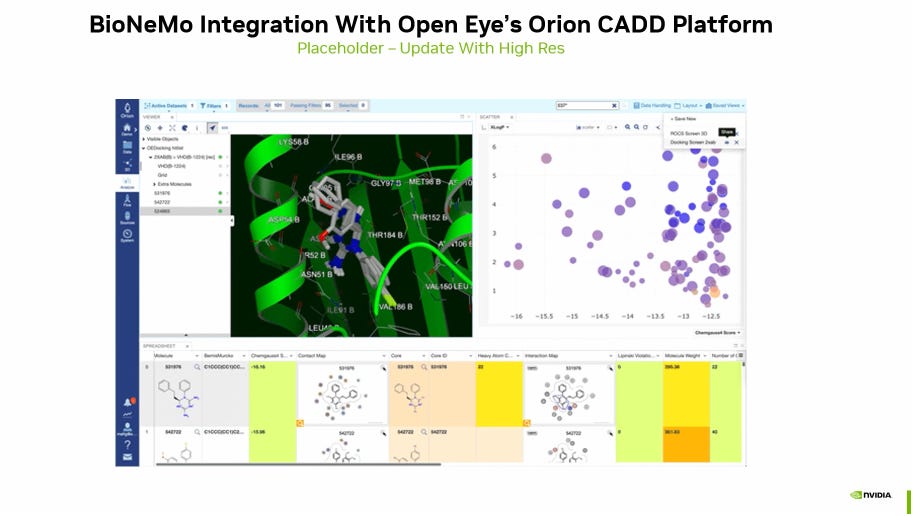

The list of FDA-cleared algorithms continues to increase, ranging from drug discovery to radiology. The BioNeMo framework has been deployed for approximately a year now and has attracted big customers. Amgen and Novo Nordisk plan on leveraging this framework for drug discovery. The National Health Service (NHS) has deployed Mia and used it to spot tiny cancers missed by doctors.

Business administration and manager/employee relationships are likely to be improved as well. ServiceNow deployed internal agents to receive admin requests and conduct onboarding, and reported reduced overhead. Gucci reported that their ServiceNow call center implementations improved employee job satisfaction as it enabled better customer interaction. There are parallels here with doctor and patient interactions in the context of diagnostic/EHR agents. Bank of America’s Erica has accumulated significant customer interactions since launch. Do people start to call Ford an AI company just because they use RAG and deploy agents? The future of data is structured and it is going to be quite a ride. Buckle up.

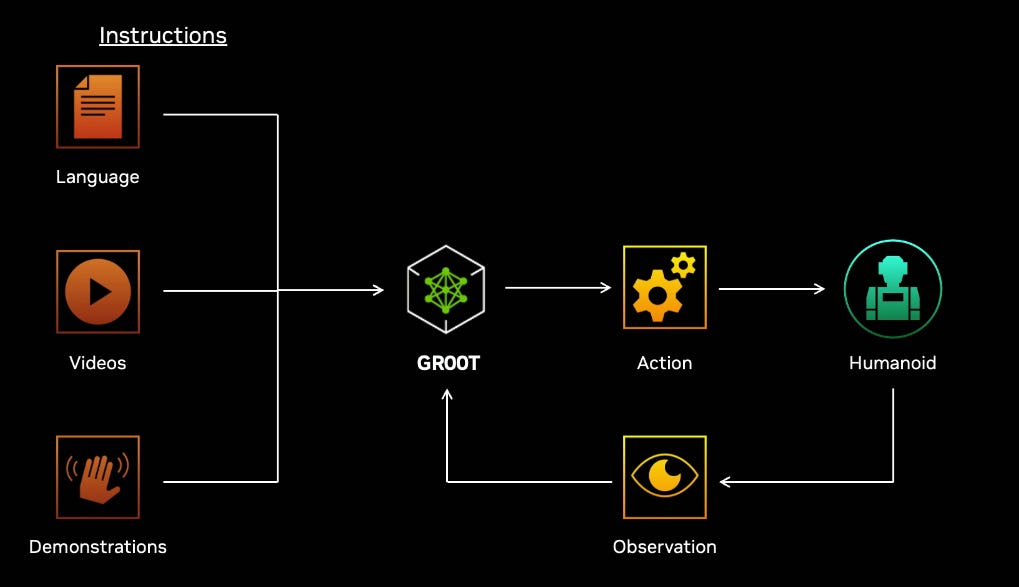

Automation is going to accelerate in many places, with generalized humanoids being the ultimate distant goal. In the meantime, customized agents for automation will help the American manufacturing industry catch up to China.

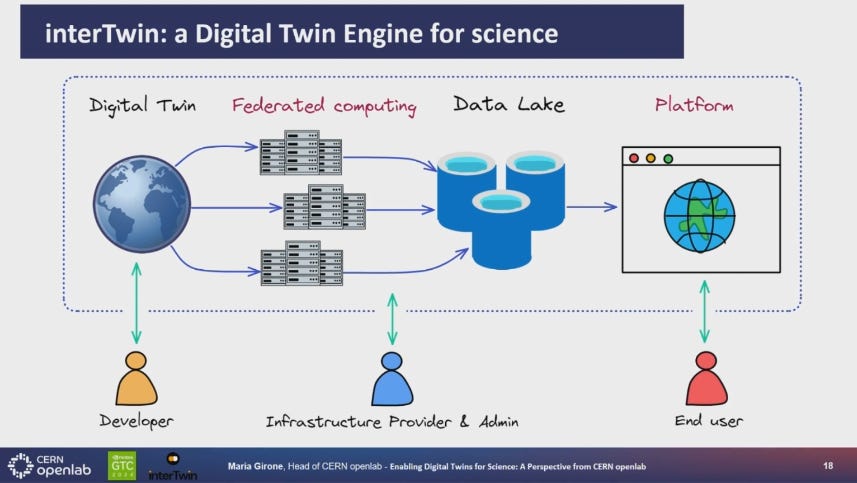

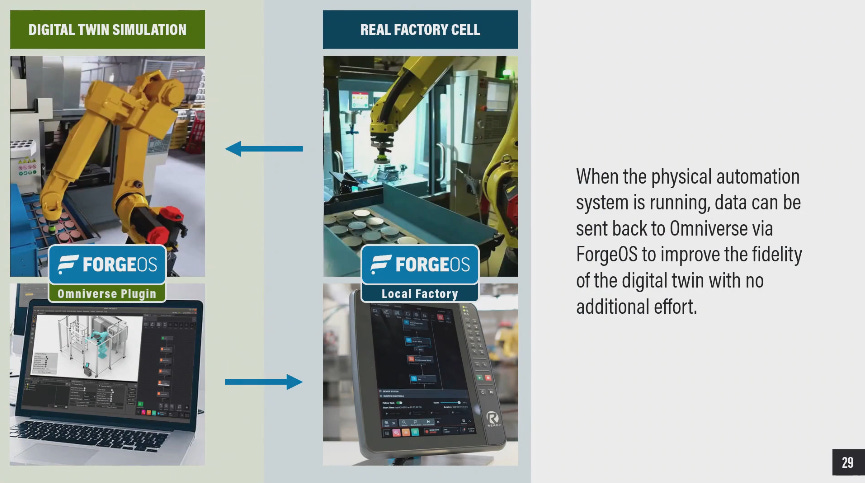

Automation is at the heart of Nvidia’s digital twins (the Omniverse was what Metaverse should have been, addressing industrial/retail applications instead of targeting conference calls and avatars). The list of enterprises utilizing digital twins will keep growing as OpenUSD grows as the default simulated platform. Data lake platforms are the primary beneficiaries as synthetic data grows exponentially. I have included a few slides from CERN and Lowe’s GTC presentations demonstrating how they are using their digital twins.

But wait, there is more. There are many companies fully embracing the agent + digital twin approach for their workflow. Here are five other companies showing how this approach was used to deploy a solution. The common theme is that simulation improves layout optimization and deployment cost. For most systems, the majority of the cost is decided in the early phases of the design cycle and this framework provides a way to conduct robust optimization early on. In addition, it can be used for real-time fault monitoring and maintenance after deployment.

It is not hard to see how Apple Vision Pro fits into this agent + digital twin framework. It is probably not a coincidence Apple was a founding member of OpenUSD, along with Pixar. Apple products have been emphasizing “spatial computing” and people will find out why when text-to-3D through Gaussian Splatting becomes mainstream later this year. There are many who think Apple is lagging in innovation. It would not surprise me to see Apple have the best augmented reality application for this agent + digital twin framework within a year.

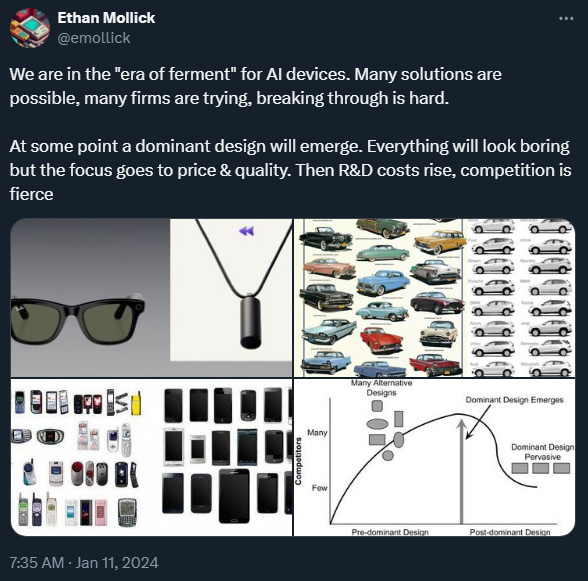

Automation and self-driving go hand in hand. The technology for computer vision, motion and trajectory planning translates directly. Generalized self-driving might still be some time away, but finetuned self-driving is here. LLMs are being incorporated into planning networks for autonomous driving capabilities, with Google Waymo leading the way in the Bay Area and starting Uber Eats deliveries in Phoenix.

The retail and marketing sectors are undergoing fast adoption, as content creation barriers have disappeared. L’Oreal provided some hints about how Hyper Individualization might look in the future.

The entertainment industry is under pressure to adapt. I believe the golden age of media is here and creative destruction is happening. True storytellers will adapt and survive.

One worry that I am starting to have is that a focus on modularity and optimization often leads to a loss of “creativity” as solutions converge. I remain optimistic but will keep an eye out for humans losing curiosity. I am still a firm believer that humans are innately curious.

Conclusion

In summary, GLP-1 and LLMs coupled with code interpreter (renamed “Advanced Data Analysis”) are poised to more than offset deglobalization with respect to contribution to corporate capex and margin improvement, as productivity is increased for every sector. It is probable that the effects will be felt within 3-6 months (noticed this has trended down since), which is faster than those treating GLP-1/AI as a Gartner Hype Cycle expect. The potential from weight loss and English as a programming language is still underestimated. The transmission and absorption of information is much faster now and Microsoft has deployed OpenAI enterprise solutions globally. Nvidia DGX is partnering with hyperscalers (Microsoft, Amazon, Google, Oracle, in that order) to deploy enterprise/sovereign solutions that combine agents and digital twins. If AI does bring productivity growth, it is plausible long rates are higher than pre-Covid, just for a different reason than the stagflation crowd thinks.